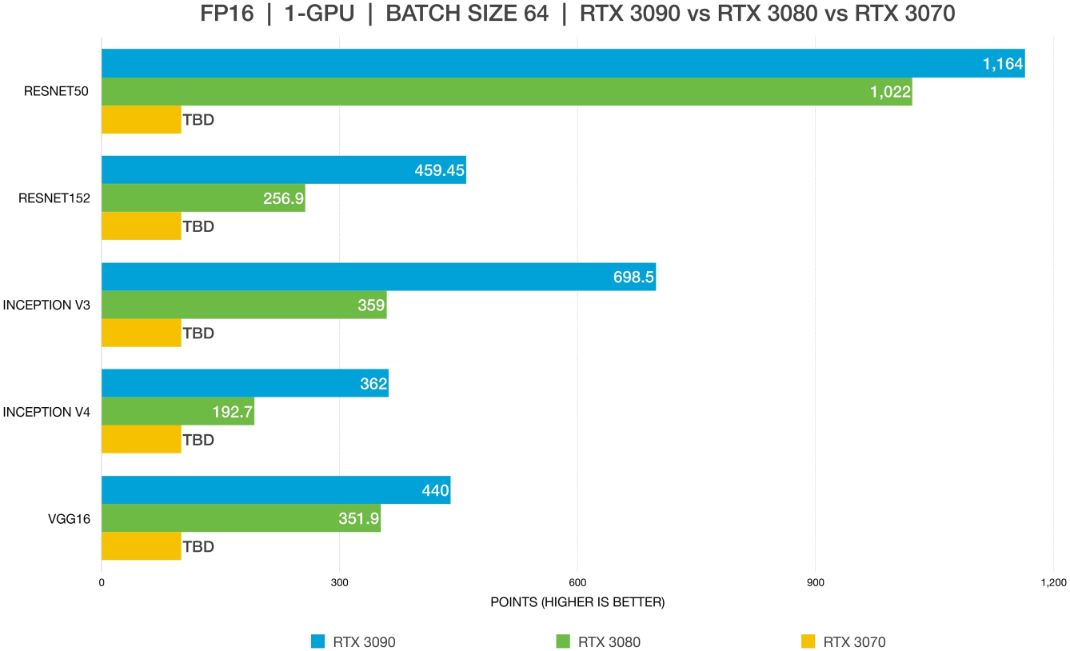

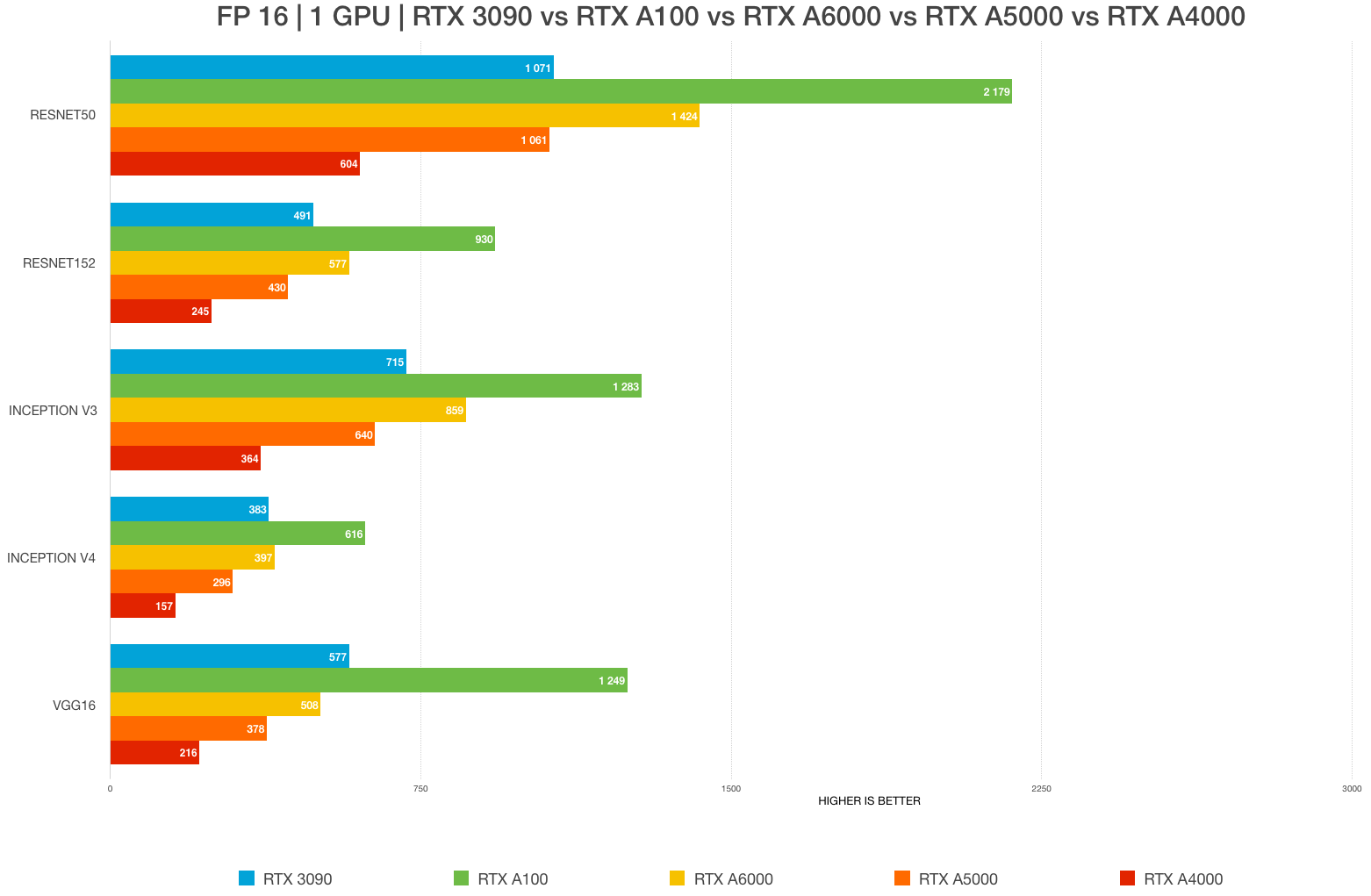

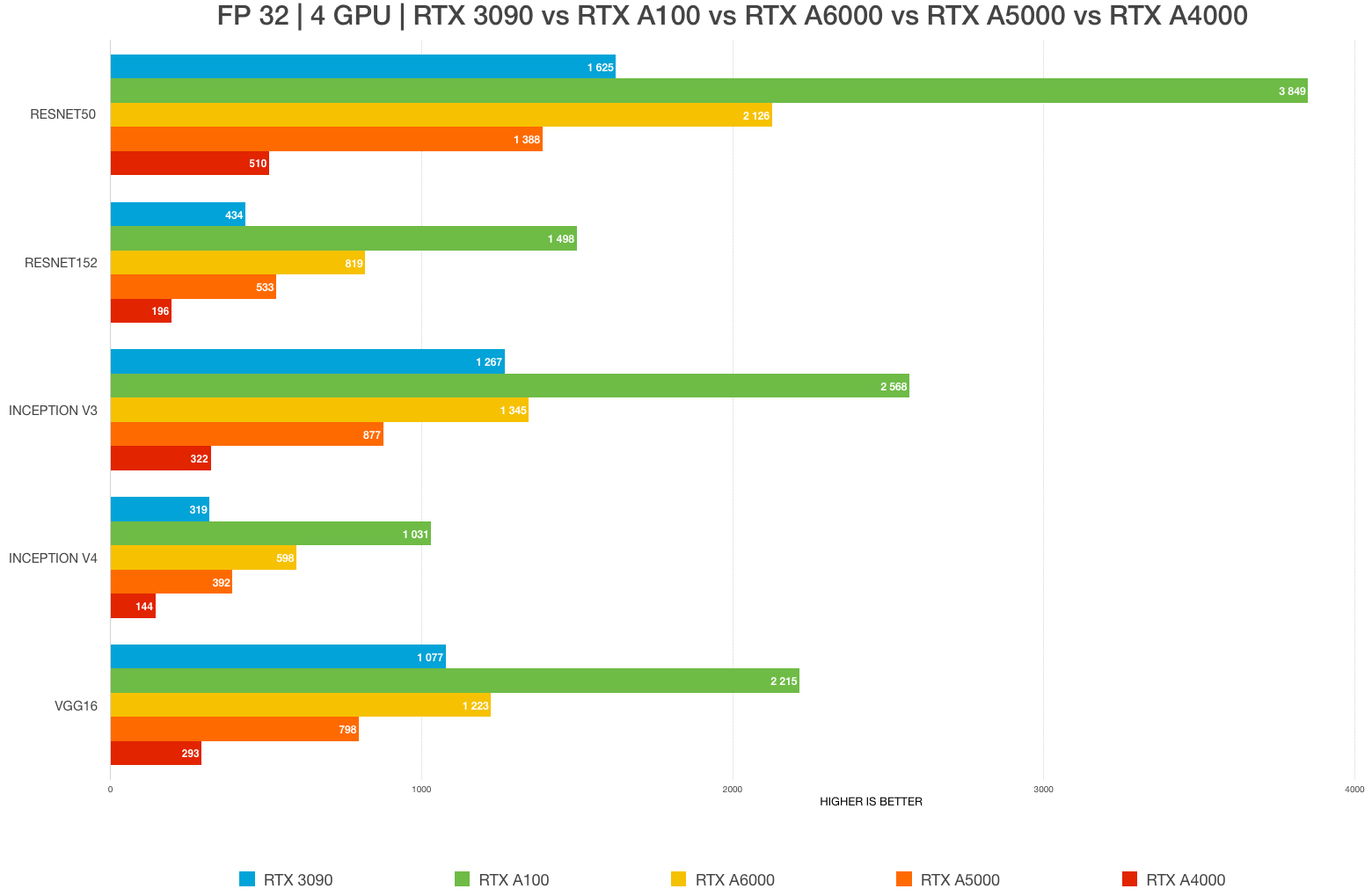

Best GPU for AI/ML, deep learning, data science in 2023: RTX 4090 vs. 3090 vs. RTX 3080 Ti vs A6000 vs A5000 vs A100 benchmarks (FP32, FP16) – Updated – | BIZON

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

Deep Learning vs Machine Learning Challenger Models for Default Risk with Explainability | NVIDIA Technical Blog

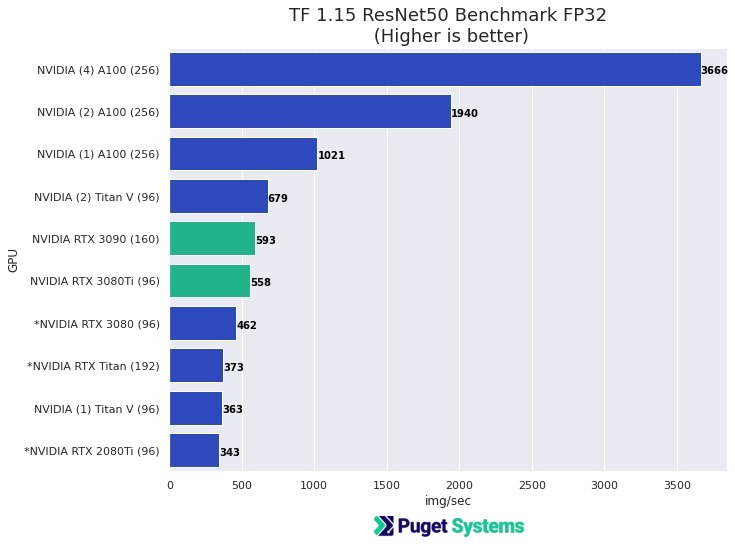

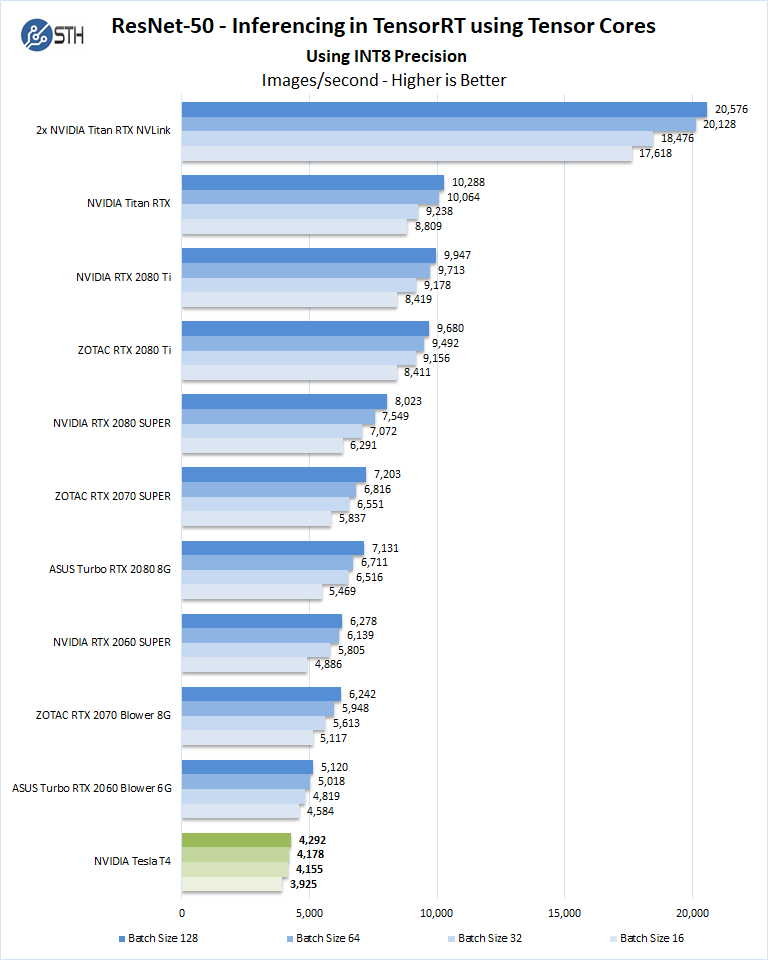

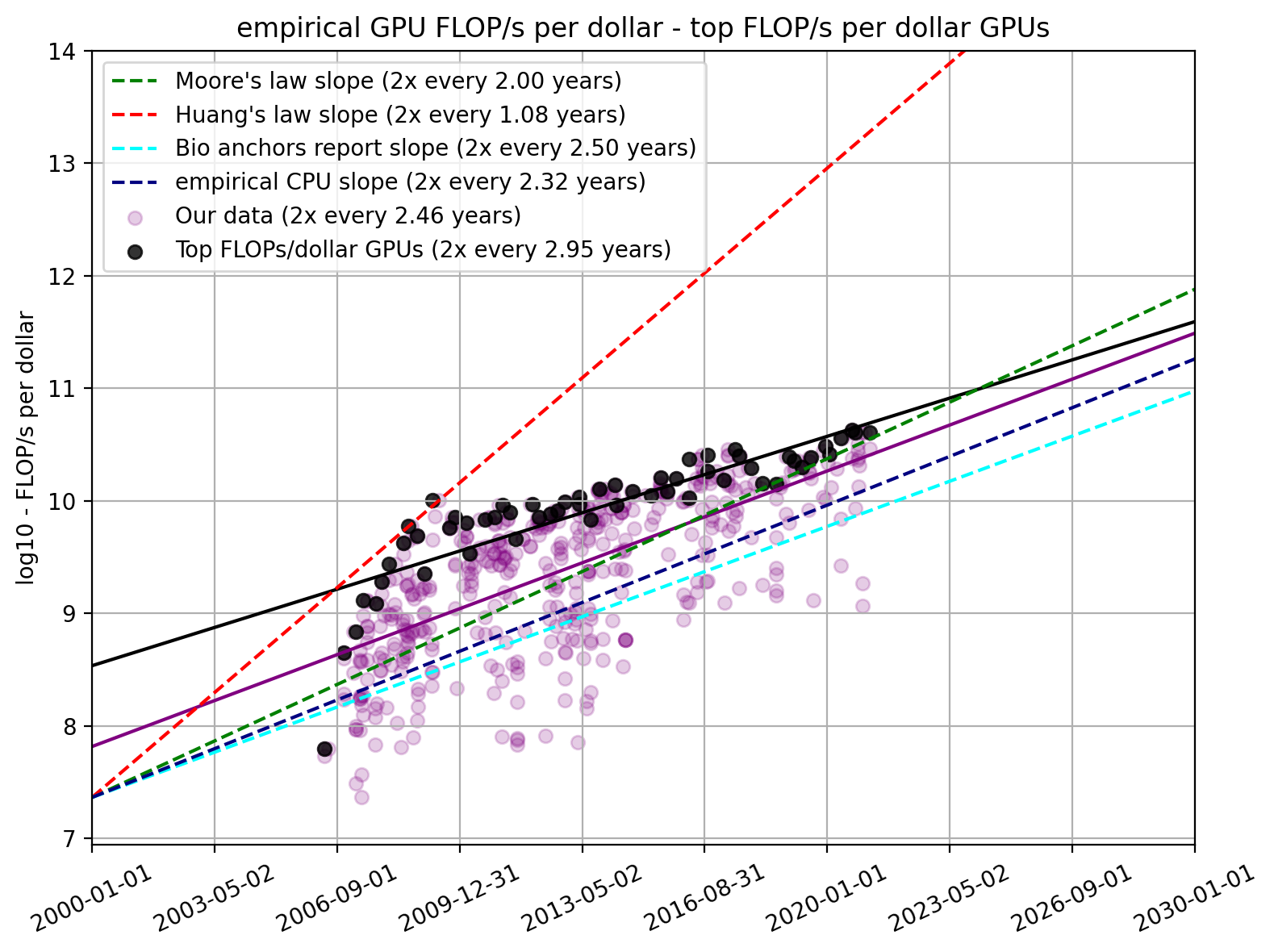

Best GPU for AI/ML, deep learning, data science in 2023: RTX 4090 vs. 3090 vs. RTX 3080 Ti vs A6000 vs A5000 vs A100 benchmarks (FP32, FP16) – Updated – | BIZON

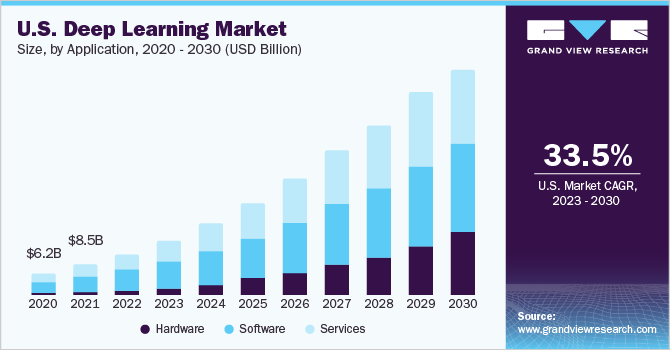

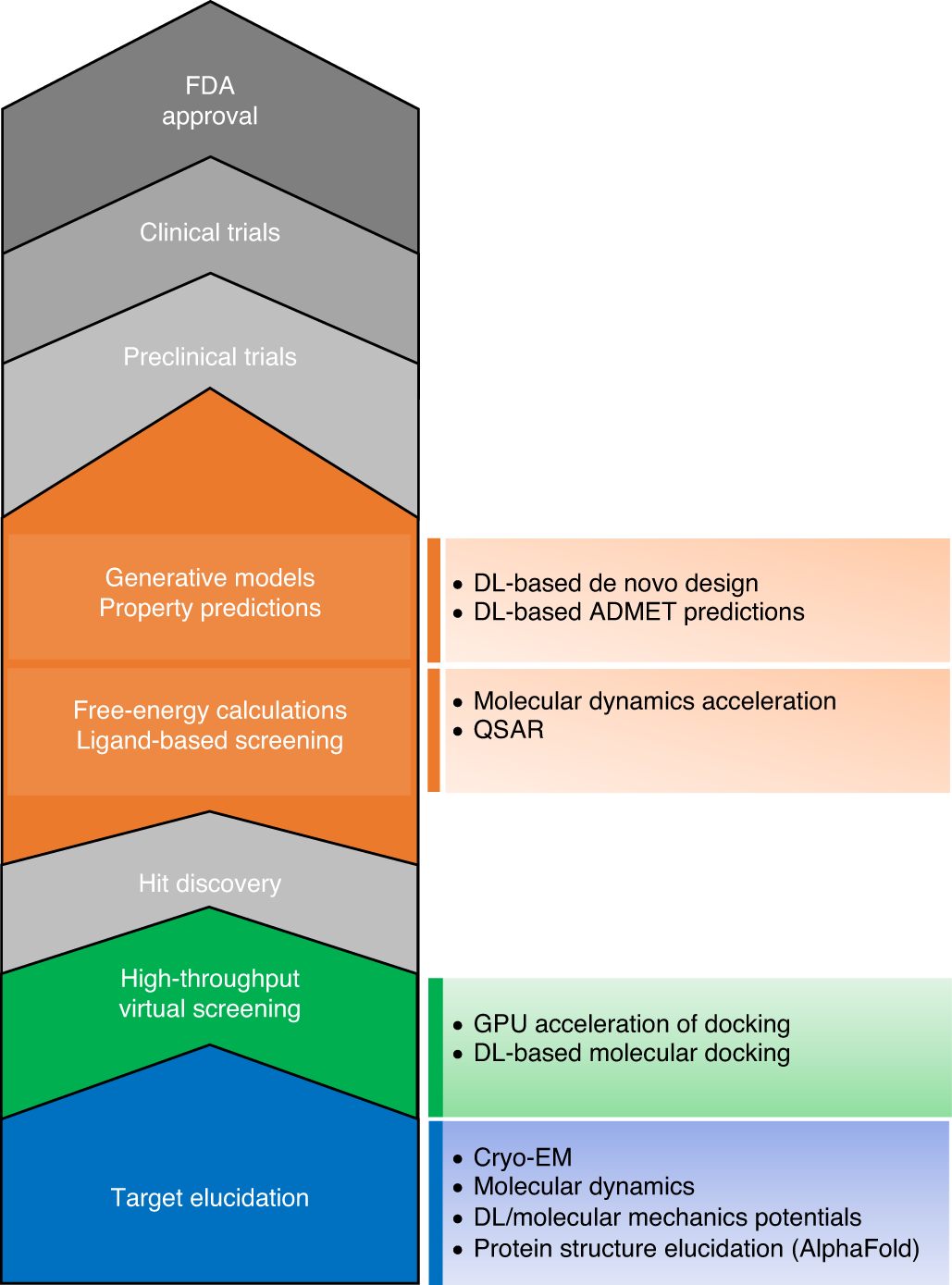

The transformational role of GPU computing and deep learning in drug discovery | Nature Machine Intelligence

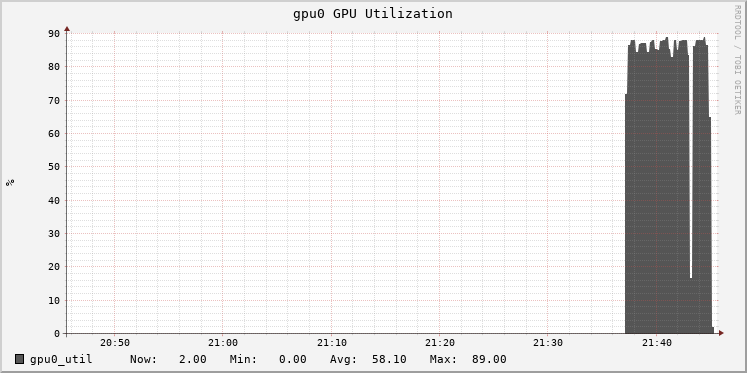

Monitor and Improve GPU Usage for Training Deep Learning Models | by Lukas Biewald | Towards Data Science